What are Android accessibility services? Why are they needed? What superpowers do they offer to your app? In this first post of the series, I give an introduction to Android accessibility services.

This is the first post in a series. Make sure to check out the other posts:

- Android accessibility services 101

- Writing your first Android accessibility service (coming soon)

- Android accessibility services. Exploring the contents of the screen (coming soon)

- Android accessibility services. Key events (coming soon)

- Android accessibility services. Injecting global actions and gestures (coming soon)

Accessibility in the pre-smartphone era

I devoted part of my career to creating tools that provide adaptive or alternative access methods for people with physical disabilities. For many years, desktop operating systems (basically Microsoft Windows) were our target. On such a platform, you can find a range of assistive technologies suitable for almost any person with disabilities.

Back in the mid-2000s, the Tablet PC (i.e. a tablet running Microsoft Windows) provided a form of mobility that was very convenient when attached to a wheelchair. At the time, regular mobile phones were already mainstream but were mostly used for talking and texting. Accessibility largely consisted of bigger screens and keypads, Bluetooth headsets, some form of screen reader, or, in some cases, controlling the phone remotely from a (suitably adapted) computer to answer and place calls and to send SMS messages.

How the smartphone changed it all

Then the smartphone changed it all. At the beginning of the 2010s, a new generation of multi-touch smart mobile devices became mainstream, and iOS and Android ended up wiping out virtually every competing platform. It was a big paradigm shift.

Now you carry in your pocket a fully fledged computer, permanently connected to the Internet, with a web browser, messaging applications, access to social networks, high-resolution cameras, a video player, GPS, games, and much more. Everybody wanted a smartphone, and people with disabilities were no exception.

But, as often happens with disruptive technologies, accessibility was not high on the priority list. Just as the first versions of Windows lacked accessibility features, the earliest versions of Android did not provide any accessibility features at all. The concept of an accessibility service was introduced in Android 1.6 (Donut), and its main consumers were screen readers. It was not until Android 4.1 (Jelly Bean) that it gradually evolved to give developers the opportunity to create their own assistive technology solutions.

Screen readers

Sight plays a central role in human-computer interaction. Therefore, it should come as no surprise that early accessibility efforts focused on blind and low-vision people.

If the user cannot see the screen, an alternative representation of its contents is required, for instance, a spoken description via synthetic speech. This has a profound impact on how the user interacts with the device. Without visual feedback, the mouse becomes almost useless, so interaction must rely on keyboard or touchscreen input instead.

Take, for instance, navigating across menu entries, links on a website, or chunks of text in a word processor. This requires some mechanism to select those elements on the screen. A sighted user can see the elements and how the selection changes as the interaction progresses. For a blind person, however, these interaction cues need to be provided in an alternative way.

Furthermore, the contents of the screen change (e.g. a menu opens, an application comes to the foreground, or a web page finishes loading), and the user must be informed of these changes too.

The software that provides this kind of alternative interaction is called a screen reader. It renders text and image content as speech or braille output and usually changes, to some extent, how user input is processed. On Android, for instance, the best-known screen reader is TalkBack.

TalkBack reacts to screen changes by describing them aloud using text-to-speech, and it puts the touchscreen into a special touch exploration mode. In this mode, the screen reader describes what the user is touching before any action is triggered. Moving your finger across the screen is like hovering a mouse pointer.

What is an accessibility service and why is it needed?

To perform its job, a screen reader needs a way to "see" the contents of the screen, to know when they change, and to modify the behavior of the touch panel. These functions are provided by the so-called accessibility API. Every major operating system provides its own accessibility API, and accessibility services are the way Android exposes it.

Beyond screen readers: physical disabilities

People with physical disabilities may require alternative access methods, whether special hardware (e.g. a keyboard with bigger keys or a joystick that acts as a mouse), special software (e.g. a screen reader or face tracking), or both (e.g. switch access scanning or eye tracking).

Supporting these methods may require programmatically intercepting, filtering, and/or injecting input events, such as mouse clicks or keystrokes, into the operating system. Traditionally, these features were not part of the accessibility API but of the regular OS API.

Security concerns

On Windows, and to some extent on Linux, any application could install a hook to intercept keystrokes, or move and click the mouse programmatically by injecting events. In the DOS and early Windows era, this was not a major concern.

Before Internet access was widespread, one of the major threats consisted of computer viruses and similar forms of malware, whose main infection vector was software copies distributed on physical media (diskettes and CDs) and, to a lesser extent, early online services such as bulletin board systems (BBSes). Common sense and reasonably up-to-date antivirus software were generally enough to keep those threats away.

Modern mobile devices are a different story. They were born into a world of permanent connectivity and an ever-increasing number of online services (banking, finance, social networking, or shopping, to name a few) in which security is a major concern. Allowing a third-party application to read the contents of your email inbox, or to hook the keyboard and intercept keystrokes while you type a password, could be a serious security threat. These nifty features, which in the 90s were available to any application, today require special permissions.

iOS approached this issue by simply withholding these features from third-party applications, which means that only Apple can develop system-wide accessibility features for the iPhone and the iPad.

On Android, however, Google opted to provide a subset of these features through accessibility services so that any developer could create their own assistive technology software. An accessibility service cannot run unless it is explicitly enabled by the user.

Of course, these restrictions can be circumvented by jailbreaking or rooting the device, but such practices are not practical for most users and are thus out of the scope of this post.

Android accessibility services superpowers

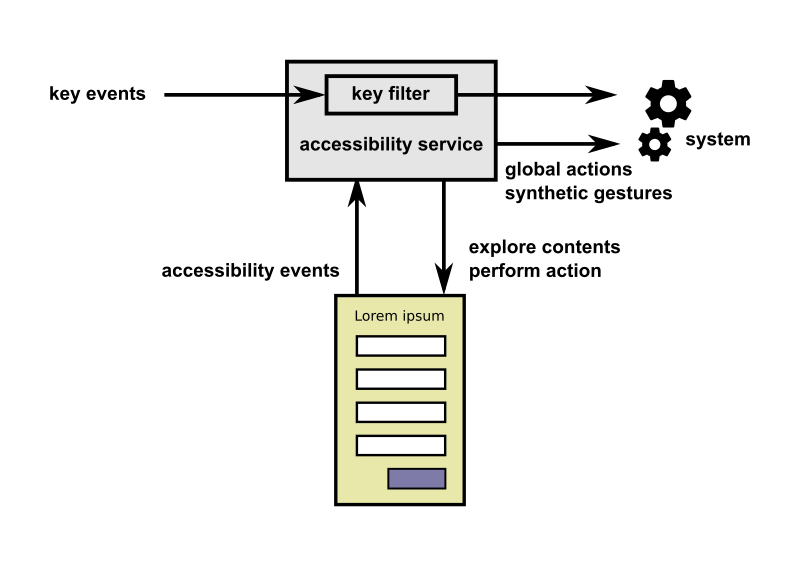

The illustration above depicts how an Android accessibility service works from a conceptual point of view. The diagram is not exhaustive, but it is enough to understand the main features and powers that an accessibility service offers to developers.

Listen to key and accessibility events

Key events are generated by devices such as physical buttons, external keyboards, gamepads, and switch adapters. An accessibility service can observe this stream of input events before it is passed to the rest of the system and react accordingly. It can also remove events from the stream.

Accessibility events are generated when the state of the user interface changes: for instance, when a new window is presented to the user, a button is clicked, or the focus moves. Screen readers rely heavily on accessibility events to perform their function.
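
To make these two callbacks concrete, here is a minimal Kotlin sketch of a service that logs a few accessibility event types and swallows presses of the volume-up key. It assumes the service is declared in the manifest with the `android.permission.BIND_ACCESSIBILITY_SERVICE` permission, and that its XML configuration sets `android:canRequestFilterKeyEvents="true"` and the `flagRequestFilterKeyEvents` flag, without which `onKeyEvent` is not called. The class name `EventLoggerService` and the choice of volume-up as the filtered key are purely illustrative.

```kotlin
import android.accessibilityservice.AccessibilityService
import android.util.Log
import android.view.KeyEvent
import android.view.accessibility.AccessibilityEvent

// Hypothetical example service: logs UI state changes and filters one key.
class EventLoggerService : AccessibilityService() {

    // Called for every accessibility event that matches the service configuration.
    override fun onAccessibilityEvent(event: AccessibilityEvent?) {
        if (event == null) return
        when (event.eventType) {
            AccessibilityEvent.TYPE_WINDOW_STATE_CHANGED ->
                Log.d(TAG, "Window changed in ${event.packageName}")
            AccessibilityEvent.TYPE_VIEW_CLICKED ->
                Log.d(TAG, "Clicked: ${event.text}")
            AccessibilityEvent.TYPE_VIEW_FOCUSED ->
                Log.d(TAG, "Focus moved to a ${event.className}")
        }
    }

    // Receives key events before the rest of the system does.
    // Returning true consumes the event; returning false lets it continue normally.
    override fun onKeyEvent(event: KeyEvent?): Boolean {
        if (event != null && event.keyCode == KeyEvent.KEYCODE_VOLUME_UP) {
            if (event.action == KeyEvent.ACTION_DOWN) {
                Log.d(TAG, "Volume up intercepted")
            }
            return true // Remove both the DOWN and the UP event from the stream.
        }
        return super.onKeyEvent(event)
    }

    // Called when the system wants the service to stop its current feedback.
    override fun onInterrupt() {
        // Nothing to interrupt in this example.
    }

    private companion object {
        const val TAG = "EventLoggerService"
    }
}
```

Consuming both halves of the key press, rather than only `ACTION_DOWN`, avoids leaving the rest of the system with an unmatched key-up event.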

Explore the contents of the screen and perform actions

An accessibility service may request a description of the current contents of the screen at any time. This description can then be examined programmatically to provide feedback (e.g. reading aloud the label of a button or a paragraph of text visible on the user interface), and its elements can be asked to perform actions (e.g. click, focus, or scroll).
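
As a rough illustration, the following Kotlin sketch walks the node tree of the active window, looks for an element whose text or content description equals a given label, and clicks its nearest clickable ancestor. It assumes the service configuration sets `android:canRetrieveWindowContent="true"`, since otherwise no window content is available; the helper names `clickByLabel` and `findByLabel` are hypothetical.

```kotlin
import android.accessibilityservice.AccessibilityService
import android.view.accessibility.AccessibilityNodeInfo

// Hypothetical helper: find an on-screen element by its visible label and click it.
fun AccessibilityService.clickByLabel(label: String): Boolean {
    // Root of the node tree describing the window the user is interacting with.
    val root = rootInActiveWindow ?: return false
    var node: AccessibilityNodeInfo? = findByLabel(root, label) ?: return false
    // Labels often sit on a child view; climb to the nearest clickable ancestor.
    while (node != null && !node.isClickable) {
        node = node.parent
    }
    return node?.performAction(AccessibilityNodeInfo.ACTION_CLICK) ?: false
}

// Depth-first search over the node tree, comparing text or content description.
private fun findByLabel(node: AccessibilityNodeInfo, label: String): AccessibilityNodeInfo? {
    val text = node.text ?: node.contentDescription
    if (text?.toString() == label) return node
    for (i in 0 until node.childCount) {
        val child = node.getChild(i) ?: continue
        findByLabel(child, label)?.let { return it }
    }
    return null
}
```

The framework also offers `AccessibilityNodeInfo.findAccessibilityNodeInfosByText`, which performs a case-insensitive containment search; the explicit traversal above simply makes the tree walk visible.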

Perform global actions

An accessibility service can also perform so-called global actions which, as the name suggests, act at the system level rather than on an individual control. Global actions include back, home, recents/overview, opening the notification drawer, and taking a screenshot, among others.
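
A sketch of how an assistive app might route its own commands to global actions follows. The `SystemCommand` enum and the `performSystemCommand` helper are hypothetical; `performGlobalAction` and the `GLOBAL_ACTION_*` constants belong to `AccessibilityService`.

```kotlin
import android.accessibilityservice.AccessibilityService
import android.os.Build

// Hypothetical commands that, e.g., a switch-access app could map to its switches.
enum class SystemCommand { BACK, HOME, RECENTS, NOTIFICATIONS, SCREENSHOT }

// Translates a command into the matching global action and asks the system to run it.
// Returns false if the action is unavailable on this Android version or the system refuses it.
fun AccessibilityService.performSystemCommand(command: SystemCommand): Boolean {
    val action = when (command) {
        SystemCommand.BACK -> AccessibilityService.GLOBAL_ACTION_BACK
        SystemCommand.HOME -> AccessibilityService.GLOBAL_ACTION_HOME
        SystemCommand.RECENTS -> AccessibilityService.GLOBAL_ACTION_RECENTS
        SystemCommand.NOTIFICATIONS -> AccessibilityService.GLOBAL_ACTION_NOTIFICATIONS
        SystemCommand.SCREENSHOT -> {
            // GLOBAL_ACTION_TAKE_SCREENSHOT was added in Android 9 (API level 28).
            if (Build.VERSION.SDK_INT < Build.VERSION_CODES.P) return false
            AccessibilityService.GLOBAL_ACTION_TAKE_SCREENSHOT
        }
    }
    return performGlobalAction(action)
}
```

Keeping the mapping in a single exhaustive `when` means that adding a new command forces the developer to decide which global action it triggers.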

Inject synthetic gestures

Interaction on Android devices is based on touch events such as taps, swipes, or pinches. Therefore, people with physical disabilities who cannot use the touchscreen require alternative input methods, as described above.

Take, for instance, an application such as EVA Facial Mouse. It tracks the movement of the user's face to control an on-screen pointer. In this case, the user interacts with the device without touching the screen at all. Thus, when she wants to tap an icon or pinch a picture to enlarge it, EVA must execute the corresponding gesture as if it had actually been performed on the touchscreen.

Since Android 7.0 (Nougat, API level 24), accessibility services can create gestures and dispatch them to the system, so that many common gestures can be performed at arbitrary positions on the screen.
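
The following Kotlin sketch shows the kind of gestures a pointer-driven app would need: a tap and a two-finger pinch-out. It is not how EVA Facial Mouse is implemented; it assumes API level 24 or higher and a service configuration with `android:canPerformGestures="true"`. The helpers `tapAt` and `pinchOut`, their parameters, and the durations and distances used are hypothetical choices.

```kotlin
import android.accessibilityservice.AccessibilityService
import android.accessibilityservice.GestureDescription
import android.graphics.Path

// Hypothetical helper: a short tap at (x, y), in screen pixels.
fun AccessibilityService.tapAt(x: Float, y: Float): Boolean {
    // A path with a single point describes a touch that does not move.
    val path = Path().apply { moveTo(x, y) }
    val stroke = GestureDescription.StrokeDescription(path, 0L, 50L)
    return dispatchGesture(GestureDescription.Builder().addStroke(stroke).build(), null, null)
}

// Hypothetical helper: a horizontal two-finger pinch-out (zoom in) centred on (cx, cy).
// Both fingers start startOffset pixels from the centre and end endOffset pixels away.
// Path coordinates must not be negative, so cx must be at least endOffset.
fun AccessibilityService.pinchOut(
    cx: Float,
    cy: Float,
    startOffset: Float = 50f,
    endOffset: Float = 300f,
): Boolean {
    val left = Path().apply { moveTo(cx - startOffset, cy); lineTo(cx - endOffset, cy) }
    val right = Path().apply { moveTo(cx + startOffset, cy); lineTo(cx + endOffset, cy) }
    val gesture = GestureDescription.Builder()
        // Both strokes start at time 0 and last 400 ms, so the two fingers move together.
        .addStroke(GestureDescription.StrokeDescription(left, 0L, 400L))
        .addStroke(GestureDescription.StrokeDescription(right, 0L, 400L))
        .build()
    // No completion callback or handler; returns false if the gesture cannot be dispatched.
    return dispatchGesture(gesture, null, null)
}
```

An app that moves an on-screen pointer could, for example, call `tapAt(pointerX, pointerY)` when the user makes a selection, where `pointerX` and `pointerY` stand for the pointer's current position.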

Wrapping up

Android accessibility services are the way Android exposes its accessibility API. This concept has been around for many years and was primarily motivated by screen readers. An accessibility service is a piece of software with special requirements, which include processing user input and executing actions on third-party applications. These superpowers conflict with the high degree of security that modern mobile operating systems require, thus posing a challenge for mobile OS manufacturers. iOS solved this issue by not exposing its accessibility API to third-party developers. Android, however, provides the accessibility service concept, which ultimately relies on explicit user confirmation for security.

Show me the code!

In the next posts, I take a closer look at these nifty features through examples.

Next post: Writing your first Android accessibility service (coming soon)